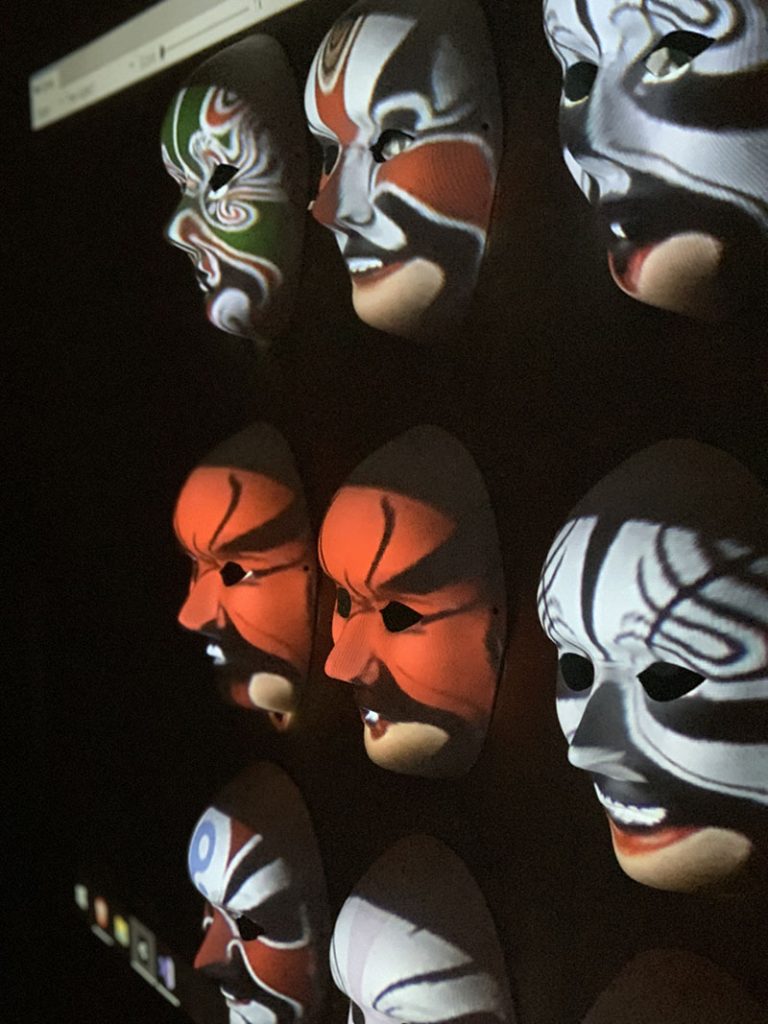

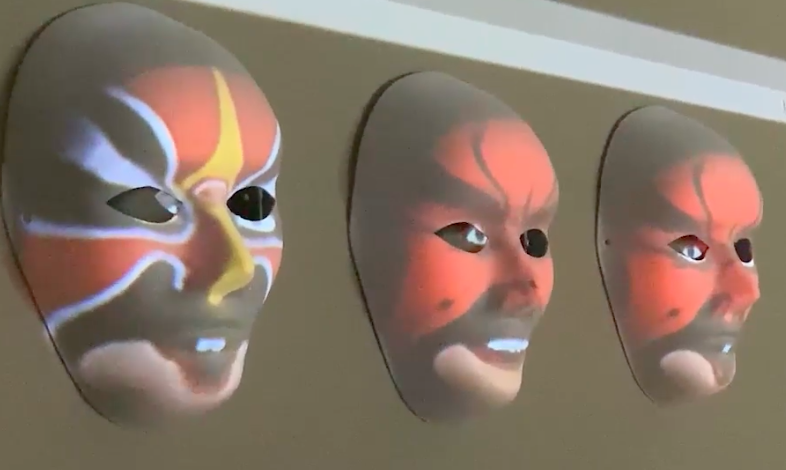

The concept of our project is to create an interactive multimedia experience that helps users understand the art of BianLian. The artworks mentioned above have static, pre-recorded effects. Our work instead focuses on the user's input. The projected results are fully interactive and change dynamically with that input, such as the user's facial expressions and body gestures. This gives the user a strong sense of participation in and connection to the virtual environment, and therefore a better understanding of what we are trying to express. The user is not just an audience member but also a performer.

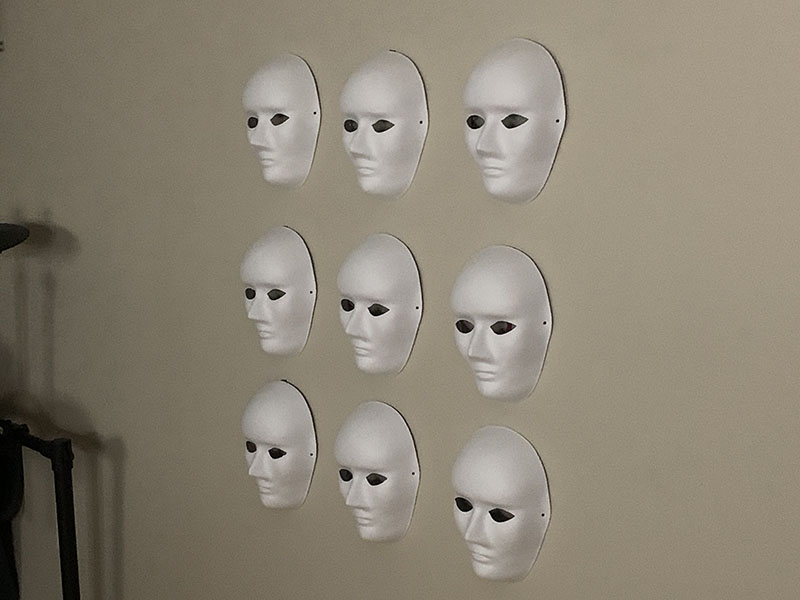

We will use the iPhone’s face-tracking capability to capture the user’s facial expression data and transmit it to Unity running on a computer. Unity uses this data to determine which emotion the user’s expression conveys, matching it against a set of expressions we defined in advance (such as happy, angry, and sad). Our masks are arranged in a three-by-three grid and are filled in order: the first user’s expression is saved to the first mask, the second user’s to the second, and so on. As a result, later users can not only interact through their own facial expressions but also see the expressions left by previous users.
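The two steps described above, classifying an expression into one of a few predefined emotions and then saving it into the next mask of the three-by-three grid, can be sketched as follows. This is a minimal illustration in Python rather than the project's Unity code. It assumes the incoming data are ARKit-style blend-shape coefficients (values from 0.0 to 1.0 keyed by names such as `mouthSmileLeft`). The rules and threshold in the hypothetical `classify_emotion` are illustrative guesses, not the project's actual values. The hypothetical `MaskGrid` wraps back to the first mask after the ninth user, which is also an assumption, since the original only says "and so on".

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

NEUTRAL = "neutral"


def classify_emotion(blend: Dict[str, float], threshold: float = 0.4) -> str:
    """Map ARKit-style blend-shape coefficients (0.0-1.0) to a coarse emotion label.

    Hypothetical rules: each emotion is scored by averaging a few blend shapes,
    and the highest score wins if it reaches the (assumed) threshold.
    """
    def avg(*names: str) -> float:
        # Missing coefficients are treated as 0.0.
        return sum(blend.get(n, 0.0) for n in names) / len(names)

    scores = {
        "happy": avg("mouthSmileLeft", "mouthSmileRight"),
        "angry": avg("browDownLeft", "browDownRight"),
        "sad": avg("mouthFrownLeft", "mouthFrownRight"),
        "surprised": avg("jawOpen", "browInnerUp"),
    }
    label, score = max(scores.items(), key=lambda kv: kv[1])
    return label if score >= threshold else NEUTRAL


@dataclass
class MaskGrid:
    """A rows x cols grid of masks, filled in order, one user per mask."""
    rows: int = 3
    cols: int = 3
    masks: List[Optional[str]] = field(default_factory=list)
    next_slot: int = 0

    def __post_init__(self) -> None:
        if not self.masks:
            self.masks = [None] * (self.rows * self.cols)

    def save(self, emotion: str) -> Tuple[int, int]:
        """Store one user's emotion in the next mask and return its (row, col).

        Filling wraps to the first mask after the last one (an assumption).
        """
        slot = self.next_slot
        self.masks[slot] = emotion
        self.next_slot = (slot + 1) % len(self.masks)
        return divmod(slot, self.cols)


if __name__ == "__main__":
    grid = MaskGrid()
    emotion = classify_emotion({"mouthSmileLeft": 0.8, "mouthSmileRight": 0.7})
    print(grid.save(emotion), grid.masks[0])  # (0, 0) happy
```

Keeping the classifier separate from the grid means the emotion rules can be tuned, or replaced by a trained model, without changing how masks are assigned to successive users.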

LianMing Sun, Riyuan Zhuang, Han Ye, Yikun Peng